Section: New Results

Multi-shot Person Re-identification in surveillance videos

Participants : Furqan Khan, Seongro Yoon, François Brémond.

keywords: person re-identification, appearance modeling, long term visual tracking

Efficient Video Summarization Using Principal Person Appearance for Video-Based Person Re-Identification

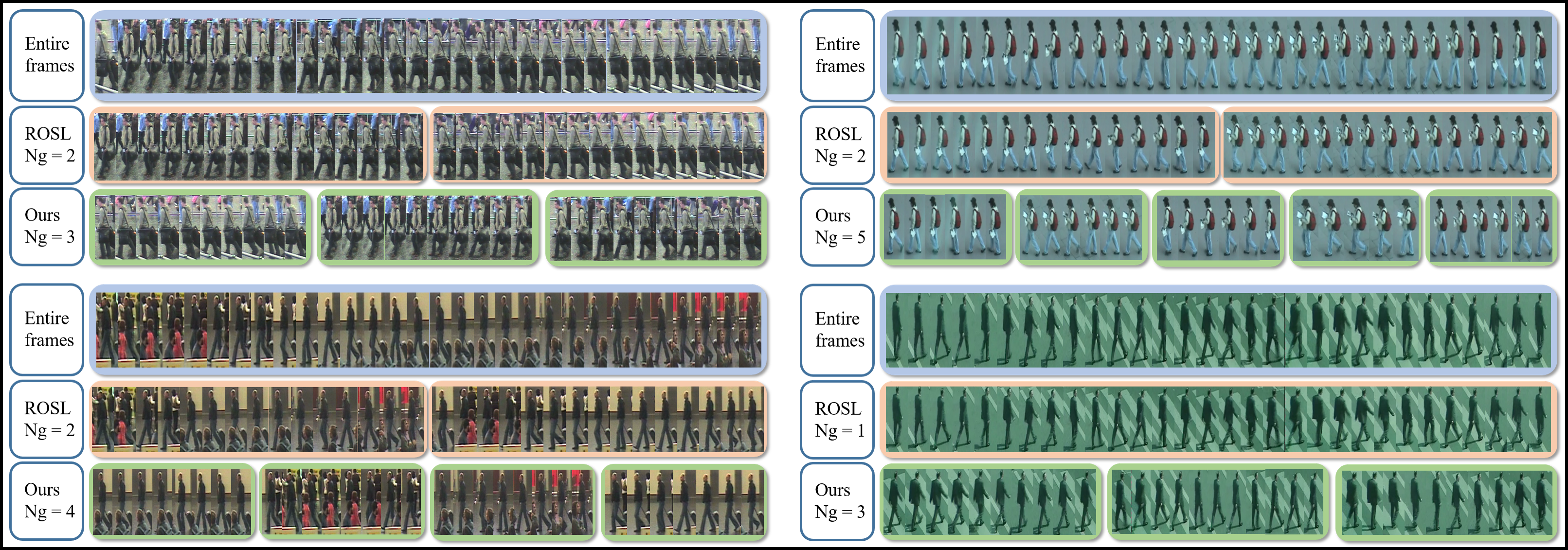

In video-based person re-identification, while most work has focused on problems of person signature representation and matching between different cameras, intra-sample variance is also a critical issue to be addressed. There are various factors that cause the intra-sample variance such as detection/tracking inconsistency, motion change and background. However, finding individual solutions for each factor is difficult and complicated. To deal with the problem collectively, we assume that it is more effective to represent a video with signatures based on a few of the most stable and representative features rather than extract from all video frames. In this work, we propose an efficient approach to summarize a video into a few of discriminative features given those challenges. Primarily, our algorithm learns principal person appearance over an entire video sequence, based on low-rank matrix recovery method. We design the optimizer considering temporal continuity of the person appearance as a constraint on the low-rank based manner. In addition, we introduce a simple but efficient method to represent a video as groups of similar frames using recovered principal appearance. Experimental results (Table 5) show that our algorithm combined with conventional matching methods outperforms state-of-the-art on publicly available datasets PRID2011 [77] and iLIDS-VID [125].

| PRID | iLIDS-VID | |||||||

| Method | r=1 | r=5 | r=10 | r=20 | r=1 | r=5 | r=10 | r=20 |

| HOG3D+RankSVM [125] | 19.4 | 44.9 | 59.3 | 77.2 | 12.1 | 29.3 | 41.5 | 56.3 |

| Color+RankSVM [125] | 29.7 | 49.4 | 59.3 | 71.1 | 16.4 | 37.3 | 48.5 | 62.6 |

| DVR [125] | 28.9 | 55.3 | 65.5 | 82.8 | 23.3 | 42.4 | 55.3 | 68.6 |

| ColorLBP [78]+RankSVM | 34.3 | 56.0 | 65.5 | 77.3 | 23.2 | 44.2 | 54.1 | 68.8 |

| DVDL [80] | 40.6 | 69.7 | 77.8 | 85.6 | 25.9 | 48.2 | 57.3 | 68.9 |

| Color+LFDA [106] | 43.0 | 73.1 | 82.9 | 90.3 | 28.0 | 55.3 | 70.6 | 88.0 |

| AFDA [88] | 43.0 | 72.7 | 84.6 | 91.9 | 37.5 | 62.7 | 73.0 | 81.8 |

| DSVR [126] | 40.0 | 71.1 | 84.5 | 92.2 | 39.5 | 61.1 | 71.7 | 81.0 |

| MTL-LORAE [119] | - | - | - | - | 43.0 | 60.1 | 70.3 | 85.3 |

| STFV3D+KISSME [93] | 64.1 | 87.3 | 89.9 | 92.0 | 43.8 | 69.3 | 80.0 | 90.0 |

| CNN+KISSME [142] | 69.9 | 90.6 | - | 98.2 | 48.8 | 75.6 | - | 92.6 |

| RFA-Net+RankSVM [131] | 58.2 | 85.8 | 93.4 | 97.9 | 49.3 | 76.8 | 85.3 | 90.0 |

| CNN+XQDA [142] | 77.3 | 93.5 | - | 99.3 | 53.0 | 81.4 | - | 95.1 |

| APR+XQDA [73] | 68.6 | 94.6 | 97.4 | 98.9 | 55.0 | 87.5 | 93.8 | 97.2 |

| TDL [133] | 56.7 | 80.0 | 87.6 | 93.6 | 56.3 | 87.6 | 95.6 | 98.3 |

| RCNN [96] | 70.0 | 90.0 | 95.0 | 97.0 | 58.0 | 84.0 | 91.0 | 96.0 |

| PPA+Euclidean | 66.6 | 90.1 | 93.5 | 96.7 | 29.6 | 55.7 | 67.6 | 79.7 |

| PPA+KISSME | 85.7 | 98.9 | 99.9 | 100.0 | 65.7 | 92.3 | 96.8 | 99.1 |

| PPA+XQDA | 87.6 | 99.2 | 99.6 | 99.9 | 66.8 | 93.9 | 97.8 | 99.8 |

In order to get a deeper insight, Figure 15 presents some qualitative results visualizing principal appearance groups discovered by our approach without manual supervision. We compare our results with the approach of Shu et.al. [116] and found our results to be relatively more visually coherent and to have more groups. Details of this work can be found in our BMVC paper [36].

|

Multi-shot Person Re-identification using Part Appearance Mixture

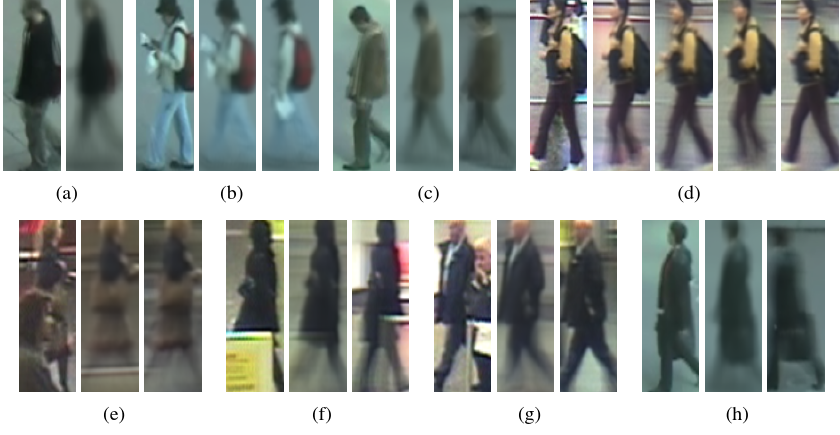

Appearance based person re-identification in real-world video surveillance systems is a challenging problem for many reasons, including ineptness of existing low level features under significant viewpoint, illumination, or camera characteristic changes to robustly describe a person's appearance. One approach to handle appearance variability is to learn similarity metrics or ranking functions to implicitly model appearance transformation between cameras for each camera pair, or group, in the system. The alternative, that is followed in this work, is the more fundamental approach of improving appearance descriptors, called signatures, to cater for high appearance variance and occlusions. A novel signature representation for multi-shot person re-identification, called Part Appearance Mixture (PAM), is henceforth presented that uses multiple appearance models, each describing appearance as a probability distribution of a low-level feature for a certain portion of an individual's body. It caters for high variance in a person's appearance by automatically trading compactness with variability as can be visually seen in the results presented in Figure 16.

|

Signature representation has probabilistic interpretation of appearance signatures that allows for application of information theoretic similarity measures. A signature is acquired over coarsely localized body regions of a person in a computationally efficient manner instead of reliance on fine parts localization. We also define a Mahalanobis based distance measure to compute similarity between two signatures. The metric is also amenable to existing metric learning methods and appearance transformation between different scenes can be learned directly using proposed signature representation. Combined with metric learning, rank-1 recognition rates of and are achieved on PRID2011 [77] and iLIDS-VID [125] datasets, respectively, which establish a new state-of-the-art on both the datasets. Detailed comparisons with other contemporary unsupervised and supervised re-identification methods are presented in table 6 and table 7.

| Spatiotemporal Methods | ||||||||

| PRID2011 | iLIDS-VID | |||||||

| Method | r=1 | r=5 | r=10 | r=20 | r=1 | r=5 | r=10 | r=20 |

| HOG3D [93] | 20.7 | 44.5 | 57.1 | 76.8 | 8.3 | 28.7 | 38.3 | 60.7 |

| FV3D [93] | 38.7 | 71.0 | 80.6 | 90.3 | 25.3 | 54.0 | 68.3 | 87.7 |

| STFV3D [93] | 42.1 | 71.9 | 84.4 | 91.6 | 37.0 | 64.3 | 77.0 | 86.9 |

| Spatial Methods | ||||||||

| PRID2011 | iLIDS-VID | |||||||

| Method | r=1 | r=5 | r=10 | r=20 | r=1 | r=5 | r=10 | r=20 |

| SDALF [68] | 5.2 | 20.7 | 32.0 | 47.9 | 6.3 | 18.8 | 27.1 | 37.3 |

| eSDC [141] | 25.8 | 43.6 | 52.6 | 62.0 | 10.2 | 24.8 | 35.5 | 52.9 |

| FV2D [94] | 33.6 | 64.0 | 76.3 | 86.0 | 18.2 | 35.6 | 49.2 | 63.8 |

| PAM-HOG | 50.6 | 72.2 | 83.6 | 93.0 | 22.9 | 44.3 | 55.7 | 69.3 |

| PAM-LOMO | 70.6 | 90.2 | 94.6 | 97.1 | 33.3 | 57.8 | 68.5 | 80.5 |

| Dictionary or Feature Learning Methods | ||||||||

| PRID2011 | iLIDS-VID | |||||||

| Method | r=1 | r=5 | r=10 | r=20 | r=1 | r=5 | r=10 | r=20 |

| DVDL [80] | 40.6 | 69.7 | 77.8 | 85.6 | 25.9 | 48.2 | 57.3 | 68.9 |

| Color+LFDA [106] | 43.0 | 73.1 | 82.9 | 90.3 | 28.0 | 55.3 | 70.6 | 88.0 |

| AFDA [88] | 43.0 | 72.7 | 84.6 | 91.9 | 37.5 | 62.7 | 73.0 | 81.8 |

| MTL-LORAE [119] | - | - | - | - | 43.0 | 60.1 | 70.3 | 85.3 |

| RCNN [96] | 70.0 | 90.0 | 95.0 | 97.0 | 58.0 | 84.0 | 91.0 | 96.0 |

| Metric or Rank Learning Methods | ||||||||

| PRID2011 | iLIDS-VID | |||||||

| Method | r=1 | r=5 | r=10 | r=20 | r=1 | r=5 | r=10 | r=20 |

| HOG3D+RankSVM [125] | 19.4 | 44.9 | 59.3 | 77.2 | 12.1 | 29.3 | 41.5 | 56.3 |

| Color+RankSVM [125] | 29.7 | 49.4 | 59.3 | 71.1 | 16.4 | 37.3 | 48.5 | 62.6 |

| ColorLBP [78]+RankSVM | 34.3 | 56.0 | 65.5 | 77.3 | 23.2 | 44.2 | 54.1 | 68.8 |

| DVR [125] | 28.9 | 55.3 | 65.5 | 82.8 | 23.3 | 42.4 | 55.3 | 68.6 |

| DSVR [126] | 40.0 | 71.1 | 84.5 | 92.2 | 39.5 | 61.1 | 71.7 | 81.0 |

| STFV3D+KISSME [93] | 64.1 | 87.3 | 89.9 | 92.0 | 43.8 | 69.3 | 80.0 | 90.0 |

| LOMO+XQDA [90] | - | - | - | - | 53.0 | 78.5 | 86.9 | 93.4 |

| LOMO+SBSR+XQDA [53] | - | - | - | - | 68.5 | 87.9 | 93.0 | 96.3 |

| RFA-Net+RankSVM [131] | 58.2 | 85.8 | 93.4 | 97.9 | 49.3 | 76.8 | 85.3 | 90.0 |

| CNN+KISSME [142] | 69.9 | 90.6 | - | 98.2 | 48.8 | 75.6 | - | 92.6 |

| CNN+XQDA [142] | 77.3 | 93.5 | - | 99.3 | 53.0 | 81.4 | - | 95.1 |

| PAM-HOG+KISSME | 55.3 | 80.7 | 90.2 | 95.6 | 33.9 | 60.0 | 70.2 | 79.1 |

| PAM-LOMO+KISSME | 92.5 | 99.3 | 100.0 | 100.0 | 79.5 | 95.1 | 97.6 | 99.1 |

For further details, please refer to our paper [31].